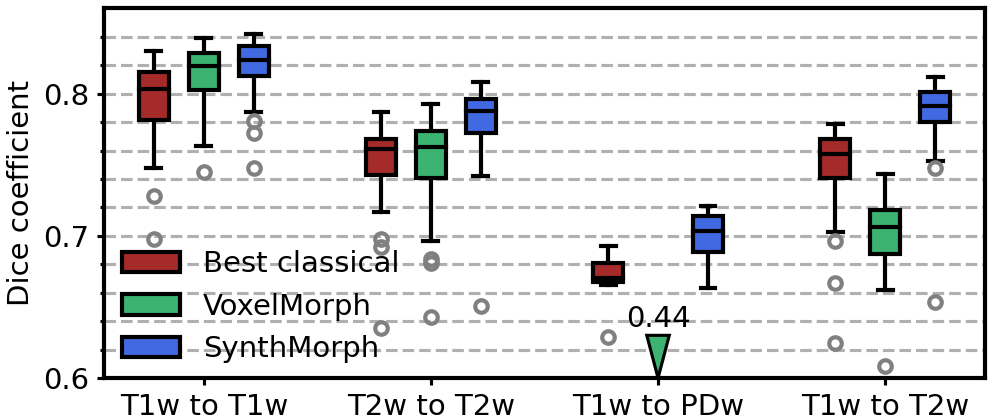

Recent advances in deep learning have dramatically improved the accuracy and efficiency of medical image registration. Yet such algorithms often struggle to generalize beyond the image types seen during training.

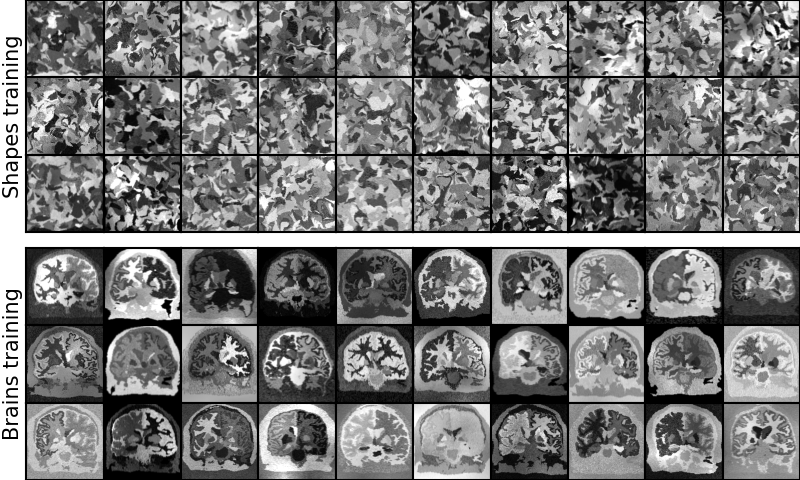

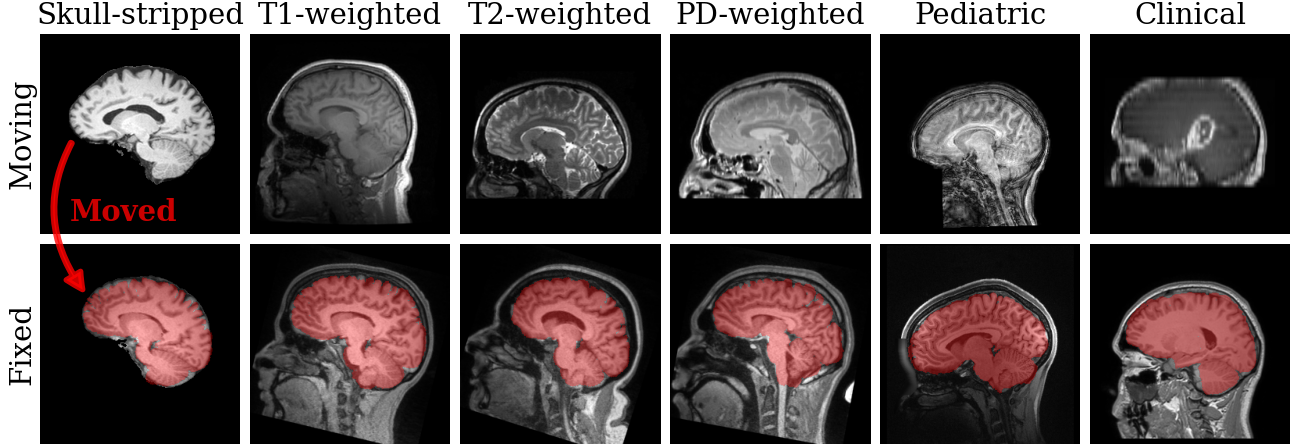

The SynthMorph learning strategy for acquisition-agnostic registration addresses this challenge by exposing networks to wildly variable synthetic data, which leads to unprecedented robustness across a landscape of real image types and greatly alleviates the need for retraining.
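
To make the idea of "wildly variable synthetic data" more concrete, here is a minimal 2D NumPy sketch. It draws a random label map by taking the argmax over smoothed noise fields, then gives each label a random intensity and adds noise and a smooth multiplicative bias field. The helper names (`box_blur`, `synth_label_map`, `synth_image`) and all parameter ranges are hypothetical illustrations, not SynthMorph's actual generator. The papers describe the real one, which includes steps such as random deformation and blurring that this sketch omits.

```python
import numpy as np


def box_blur(x, radius, axes):
    """Periodic box blur along the given axes (a cheap stand-in for Gaussian smoothing)."""
    for ax in axes:
        shifts = range(-radius, radius + 1)
        x = sum(np.roll(x, s, axis=ax) for s in shifts) / (2 * radius + 1)
    return x


def synth_label_map(shape=(64, 64), num_labels=8, radius=6, rng=None):
    """Hypothetical helper: random 'anatomy' as the argmax over smoothed noise fields."""
    rng = np.random.default_rng(rng)
    noise = rng.standard_normal((num_labels, *shape))
    noise = box_blur(noise, radius, axes=(1, 2))
    return noise.argmax(axis=0)


def synth_image(labels, rng=None):
    """Hypothetical helper: random contrast, noise, and intensity bias for a label map."""
    rng = np.random.default_rng(rng)
    means = rng.uniform(0.0, 1.0, size=labels.max() + 1)  # random intensity per label
    image = means[labels]
    # Additive Gaussian noise of random strength.
    image = image + rng.normal(0.0, rng.uniform(0.0, 0.1), size=labels.shape)
    # Smooth multiplicative bias field with a random strength.
    bias = box_blur(rng.standard_normal(labels.shape), radius=8, axes=(0, 1))
    bias *= rng.uniform(0.0, 0.3) / bias.std()
    image = image * np.exp(bias)
    return (image - image.min()) / (image.max() - image.min())  # rescale to [0, 1]


# Two images with identical anatomy but unrelated contrast.
labels = synth_label_map(rng=0)
image_a = synth_image(labels, rng=1)
image_b = synth_image(labels, rng=2)
```

Because both images share `labels`, nothing about their intensities tells a network which structures correspond; only shape does. The full strategy also deforms the label maps so that each training pair has something to register, a step this sketch leaves out.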

SynthMorph is also an easy-to-use registration tool that leverages the synthesis strategy for rigid, affine, and deformable registration of any brain image right off the MRI scanner, without preprocessing such as skull-stripping. SynthMorph registers images that differ in contrast, shape, or resolution, and lets you change the regularization strength at test time.
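
Test-time control of regularization can be pictured as a training loss in which a weight λ trades image dissimilarity against smoothness of the displacement field; a network conditioned on λ can then be queried with different values after training. The sketch below assumes the hypernetwork-style weighting (1 - λ) · dissimilarity + λ · regularization with a diffusion regularizer, and it uses mean squared error as a stand-in dissimilarity. The function names are hypothetical; see the papers for SynthMorph's exact losses.

```python
import numpy as np


def diffusion_regularizer(disp):
    """Half the mean squared finite-difference gradient of a 2D displacement field (H, W, 2)."""
    grad_y = np.diff(disp, axis=0)
    grad_x = np.diff(disp, axis=1)
    return 0.5 * (np.mean(grad_y ** 2) + np.mean(grad_x ** 2))


def weighted_loss(moved, fixed, disp, lam):
    """Hypothetical loss: (1 - lam) * dissimilarity + lam * regularization, with lam in [0, 1]."""
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lam must lie in [0, 1]")
    dissimilarity = np.mean((moved - fixed) ** 2)  # MSE stand-in for the real image term
    return (1.0 - lam) * dissimilarity + lam * diffusion_regularizer(disp)


rng = np.random.default_rng(0)
fixed = rng.random((32, 32))
moved = rng.random((32, 32))
rough = rng.normal(size=(32, 32, 2))  # noisy, non-smooth displacement field
smooth = np.ones((32, 32, 2))         # constant shift: zero spatial gradient

for lam in (0.0, 0.5, 1.0):
    print(lam, weighted_loss(moved, fixed, rough, lam), weighted_loss(moved, fixed, smooth, lam))
```

At λ = 0 only the dissimilarity counts, so the rough and smooth fields score the same; as λ grows, the rough field is penalized more, which is the trade-off a user shifts when changing the regularization strength.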

We maintain a standalone container version of SynthMorph on Docker Hub, along with a script for easy setup and use that supports any of the following container tools: Docker, Podman, Apptainer, or Singularity.

In addition, FreeSurfer ships the command-line tool `mri_synthmorph`. For the latest version, download a build of the FreeSurfer development branch.

Register a moving image to a fixed image using the default joint affine-deformable transformation model and save the result as `moved.nii.gz`:

```shell
mri_synthmorph -o moved.nii.gz moving.nii.gz fixed.nii.gz
```
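
To register several moving images to the same fixed image, the command above can be scripted. This Python sketch uses only the `-o` flag and the positional arguments shown on this page. It assumes `mri_synthmorph` is on your `PATH`; the helper names and the `moved_` output prefix are hypothetical choices.

```python
import subprocess
from pathlib import Path


def synthmorph_command(moving, fixed, out):
    """Build the argument list for the default joint registration shown above."""
    return ["mri_synthmorph", "-o", str(out), str(moving), str(fixed)]


def register_all(movings, fixed, out_dir):
    """Register each moving image to `fixed`, writing moved_<name> into out_dir."""
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    outputs = []
    for moving in movings:
        out = out_dir / f"moved_{Path(moving).name}"
        # check=True raises CalledProcessError if mri_synthmorph exits with an error.
        subprocess.run(synthmorph_command(moving, fixed, out), check=True)
        outputs.append(out)
    return outputs
```

For example, `register_all(["sub-01.nii.gz", "sub-02.nii.gz"], "fixed.nii.gz", "moved")` would write `moved/moved_sub-01.nii.gz` and `moved/moved_sub-02.nii.gz`. Passing the arguments as a list rather than a shell string avoids quoting problems with unusual file names.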

Estimate and save an affine transform `trans.lta` in FreeSurfer LTA format:

```shell
mri_synthmorph -m affine -t trans.lta moving.nii.gz fixed.nii.gz
```
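
An affine transform such as the one saved in `trans.lta` can be represented as a 4×4 matrix acting on homogeneous coordinates. In 3D, a rigid transform has 6 degrees of freedom (3 rotations, 3 translations), and a full affine transform adds scaling and shear for 12. The NumPy sketch below applies such a matrix to points and inverts it. The helper names are hypothetical, and reading the matrix out of an LTA file, including which coordinate spaces it maps between, is not shown.

```python
import numpy as np


def apply_affine(matrix, points):
    """Map an (N, 3) array of coordinates through a 4x4 homogeneous affine matrix."""
    points = np.asarray(points, dtype=float)
    homogeneous = np.hstack([points, np.ones((len(points), 1))])
    return (homogeneous @ matrix.T)[:, :3]


def rigid_matrix(angle_z_deg, translation):
    """Hypothetical helper: rotation about the z-axis, then translation."""
    theta = np.deg2rad(angle_z_deg)
    matrix = np.eye(4)
    matrix[:2, :2] = [[np.cos(theta), -np.sin(theta)],
                      [np.sin(theta), np.cos(theta)]]
    matrix[:3, 3] = translation
    return matrix


rigid = rigid_matrix(90.0, [10.0, 0.0, 0.0])
moved_point = apply_affine(rigid, [[1.0, 0.0, 0.0]])  # approximately [[10, 1, 0]]

# A full affine adds scaling (and possibly shear); here scaling is applied before the rigid part.
affine = rigid @ np.diag([1.1, 0.9, 1.0, 1.0])
inverse = np.linalg.inv(affine)  # maps points back in the opposite direction
```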

For detailed instructions and information on transforms, run:

```shell
mri_synthmorph -h
```

For those interested in training registration models, we maintain Google Colab notebooks showcasing the SynthMorph learning strategy:

Users who wish to build custom environments can find SynthMorph scripts in FreeSurfer, access the Python requirements for the latest container, browse source code on GitHub, and download the weight files independently:

Several papers describe SynthMorph registration techniques (* denotes equal contribution). If you use SynthMorph, please cite us (BibTeX)!

Joint registration and the tool:

Affine or rigid registration:

Deformable registration and the general synthesis strategy:

Start a discussion, open an issue on GitHub, or reach out to the FreeSurfer mailing list.

We thank Danielle F. Pace for help with computing surface distances. SynthMorph benefited from computational hardware generously provided by the Massachusetts Life Sciences Center.